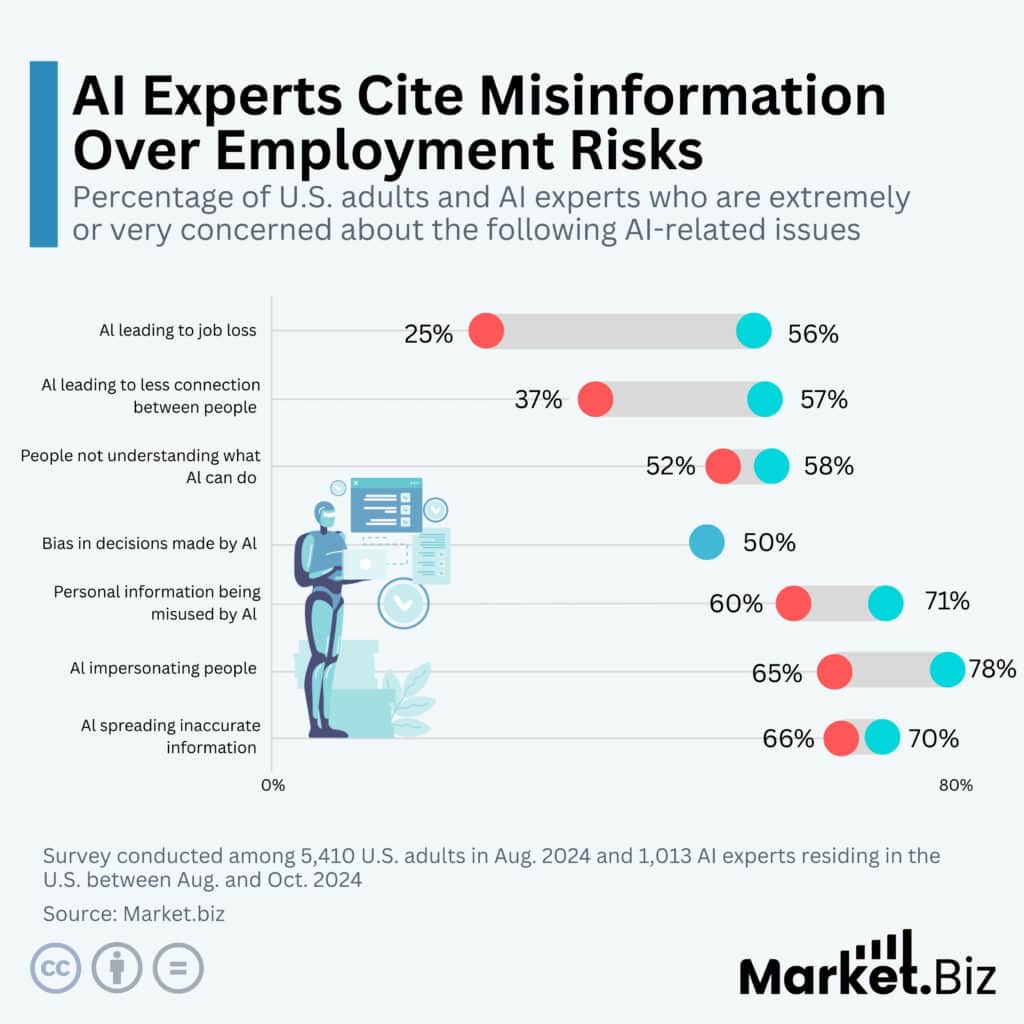

AI specialists rank misinformation from generative tools as a greater risk than widespread job losses, according to Pew Research data. Surveys indicate that 56% of U.S. adults are extremely or very concerned about AI-driven job losses, whereas only 25% of experts share that concern; both groups view deepfakes warily. Recent developments include the Allianz 2026 Risk Barometer, which highlights AI and disinformation threats, and healthcare polls reporting 61% concern about AI misinformation. No earnings or revenue figures are tied directly to these findings; tech stocks have remained stable amid the ethics debates, with no after-hours volatility noted. Looking ahead, 56% of experts expect AI to have a positive impact on U.S. society over the next 20 years.

Source: Market.Biz (AI Experts Worried More About Misinformation Than Losing Jobs)

Key Takeaways

- Pew Research surveyed AI experts (Aug 14–Oct 31, 2024) and U.S. adults (Aug 12–18, 2024), finding that 47% of experts were more excited than concerned about AI, whereas 51% of the public were more concerned than excited.

- 56% of adults are extremely or very concerned about AI-driven job losses; only 25% of experts share this fear, and experts instead prioritise misinformation and deepfakes.

- Shared worries include AI bias (a high concern for both groups), mishandling of personal data, and impersonation; 56% of experts expect AI to have a positive impact on the U.S. over the next 20 years, versus 17% of the public.

- 2026 updates: The Allianz Risk Barometer ranks AI and disinformation as rising risks; 61% of healthcare experts report high concern about AI misinformation.

Global Surge in AI Misinformation Alerts

AI experts globally are sounding the alarm on AI-amplified misinformation more than on job losses, a finding rooted in a landmark 2024 Pew Research Center survey and reinforced by 2026 reports. The study, visualized via Statista, contrasts expert optimism (47% more excited than worried about AI) with public pessimism (51% more concerned than excited), while only 25% of experts share the job-loss fears held by 56% of the public. Official Pew data underscores misinformation, deepfakes, and biases as universal red flags.

Recent escalations include Allianz’s 2026 Risk Barometer, which moves AI and disinformation up its rankings as cheap generative content feeds false narratives around elections and crises. Harvard research (2025) reports that AI-synthesized images heighten vulnerability to misinformation, and the University of Melbourne’s February 2026 “AI anxiety” analysis ties that anxiety to information overload. Healthcare surveys put expert worry about domain-specific fakes at 61%. These developments call for immediate safeguards as AI tools proliferate unchecked.

Technical Risks of AI-Driven Deception

Generative AI’s prowess in creating hyper-realistic deepfakes and synthetic text poses profound technical challenges and erodes trust in digital media. Experts highlight that models such as diffusion-based image generators can evade detection, with studies reporting realism of 90% or more, and that they amplify biases from skewed training data, consistent with the concerns Pew flagged. The implications span elections (disinformation campaigns), finance (fake reports swaying markets), and healthcare (fabricated trials). Mitigation requires watermarking, blockchain provenance, and adversarial training, yet these measures lag behind 2026 deployment timelines. Financially, unchecked risks could increase cyber insurance premiums by 20-30%, as Allianz notes, adversely affecting insurtech.

Broader Market and Regulatory Pressures

This gap between experts' calm about jobs and the broad consensus on misinformation matters amid the 2026 AI boom, with regulations such as the EU AI Act mandating labelling for high-risk systems and U.S. bills targeting deepfakes. Competitors such as OpenAI and Google invest billions in safety, more than $2B annually, while laggards face fines of up to 7% of revenue. Market.us clients in fintech and insurtech must audit their AI for bias to avert compliance pitfalls as consolidation accelerates. Why now? The post-2024 elections exposed vulnerabilities, and 2026 forecasts predict a $1T AI market if trust holds, or stagnation driven by backlash if it does not.

Bayelsa Watch’s Takeaway

Drawing from my years dissecting AI trends for Market.us reports, I find that treating misinformation, rather than job losses, as the real AI threat is spot-on and bullish for mature adoption. I think it’s a big deal because fakes threaten the veracity of fintech data, potentially raising insurtech expenses by 20-30%, yet smart regulations like the EU AI Act pave safe lanes for growth. In my experience, aligned innovators such as Grok or Anthropic that prioritize truth boost enterprise confidence, propelling us toward trillion-scale wins. Simple verdict for readers: right call; build in safeguards early.

In my time reporting on the fast-changing world of AI, I’ve noticed a major gap between what most people think about AI and what experts really know. This is one of the most important stories today. The problem is that we’re worrying about the wrong things. While people and leaders focus on trying to stop automation, which is probably going to happen anyway, we are missing a bigger threat: it is getting harder to know what’s true online.

I usually feel positive about how AI will help the economy. Historically, new technology has often created more jobs than it has eliminated. However, I am concerned about how well we can distinguish real information from fake or AI-generated content. If you invest, run a business, or just read the news, you shouldn’t worry as much about automation replacing jobs. The real opportunity is in supporting the tools and companies that can help us determine what’s real online, such as detection software, robust cybersecurity, and technologies that can verify what’s true on the internet.